Your Hierarchical attention networks github pytorch images are ready. Hierarchical attention networks github pytorch are a topic that is being searched for and liked by netizens today. You can Download the Hierarchical attention networks github pytorch files here. Find and Download all free images.

If you’re searching for hierarchical attention networks github pytorch pictures information linked to the hierarchical attention networks github pytorch topic, you have pay a visit to the right site. Our site always provides you with hints for viewing the highest quality video and picture content, please kindly search and find more enlightening video content and graphics that match your interests.

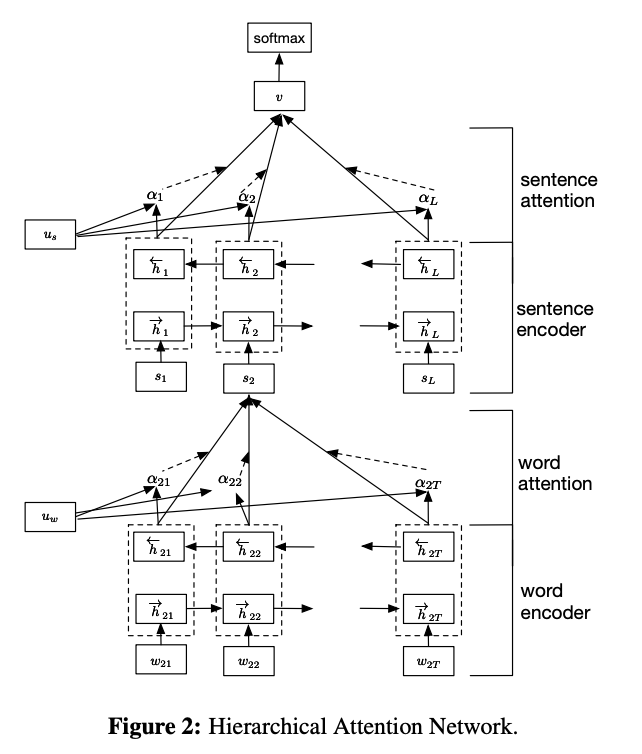

Hierarchical Attention Networks: GitHub PyTorch implementations. GitHub: cmd2001/BiDAF-pytorch-with-Self-Attention. This paper introduces and evaluates two novel Hierarchical Attention Network models (Yang et al., 2016): (i) Hierarchical Pruned Attention Networks, which remove irrelevant words and sentences from the classification process to reduce noise that could hurt document classification accuracy, and (ii) Hierarchical…

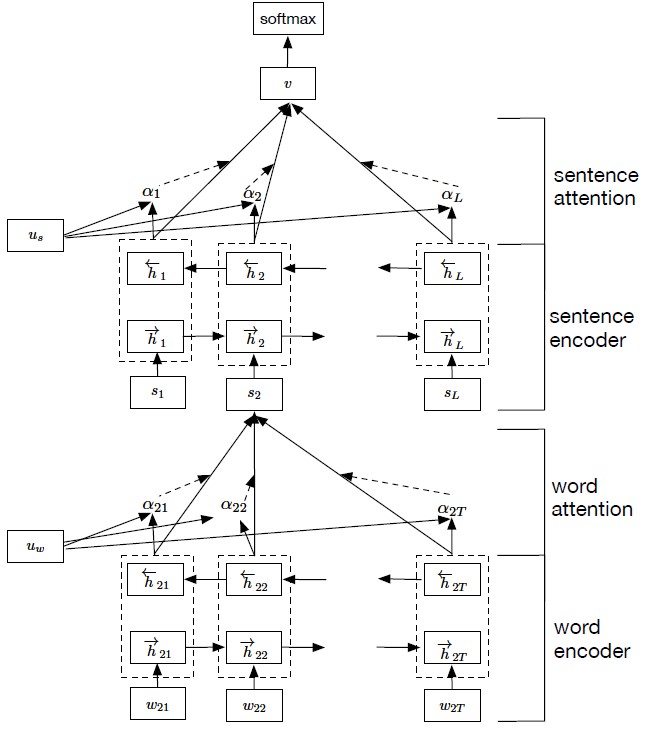

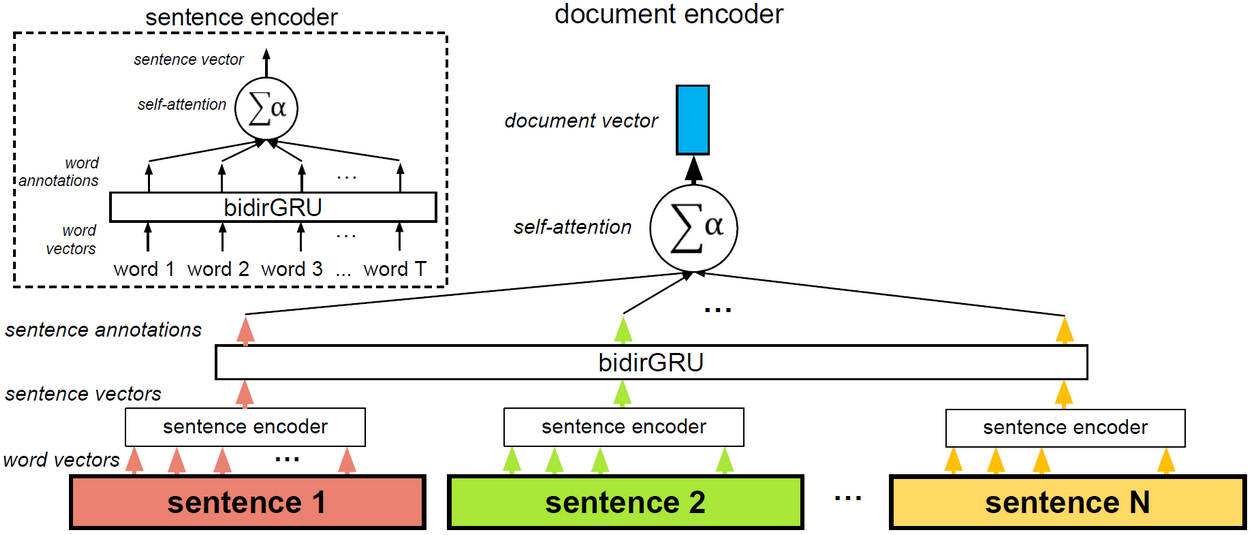

Attention in Neural Networks - 15. Hierarchical Attention (1), Buomsoo Kim, from buomsoo-kim.github.io

Attention In Neural Networks 15 Hierarchical Attention 1 Buomsoo Kim From buomsoo-kim.github.io

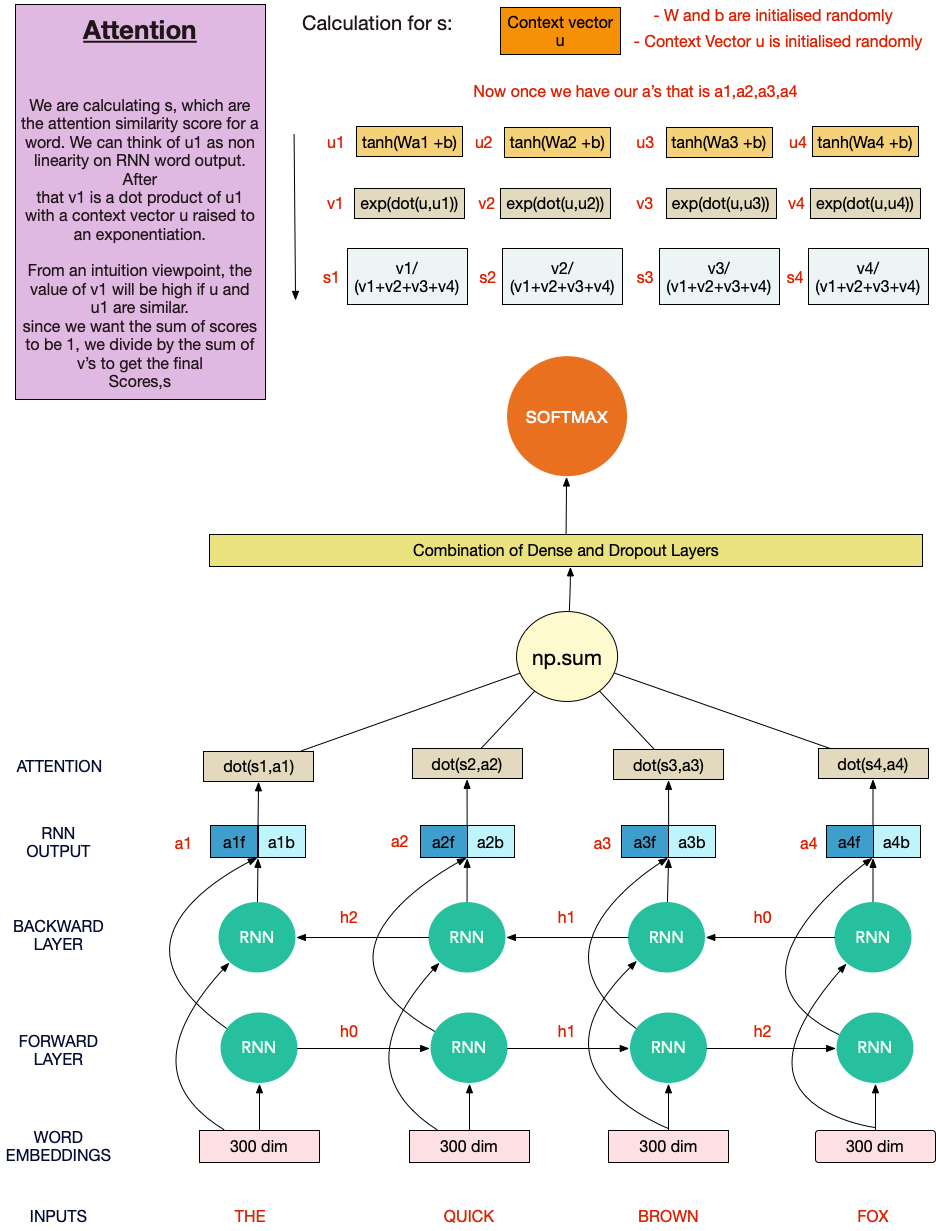

wslc1314/TextSentimentClassification (Python; topics: keras, attention, hierarchical-attention-networks; updated on Apr 11, 2019). xrick/Hierarchical-attention-networks-pytorch: Hierarchical Attention Networks for document classification. We know that documents have a hierarchical structure: words combine to form sentences, and sentences combine to form documents. Here is my PyTorch implementation of the model described in the paper *Hierarchical Attention Networks for Document Classification*. Run `python train.py`, then run `python test.py` to visualize attention.
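Because the model consumes this word-to-sentence-to-document hierarchy directly, its input is usually a grid of token ids indexed by (sentence, word) per document rather than one flat sequence. The sketch below shows one way to build a single padded document in plain Python. The naive regex sentence splitter, the `max_sents`/`max_words` limits, the reserved pad/unknown ids, and all function names are illustrative assumptions, not code from any repository mentioned here.

```python
import re
from typing import Dict, List

PAD_ID = 0  # hypothetical id reserved for padding
UNK_ID = 1  # hypothetical id for out-of-vocabulary words


def split_document(text: str) -> List[List[str]]:
    """Naively split a document into sentences, then each sentence into lowercase words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [re.findall(r"[a-z0-9']+", s.lower()) for s in sentences]


def build_vocab(docs: List[List[List[str]]]) -> Dict[str, int]:
    """Assign ids to words in order of first appearance, after the reserved ids."""
    vocab = {"<pad>": PAD_ID, "<unk>": UNK_ID}
    for doc in docs:
        for sent in doc:
            for word in sent:
                vocab.setdefault(word, len(vocab))
    return vocab


def encode_document(doc: List[List[str]], vocab: Dict[str, int],
                    max_sents: int = 3, max_words: int = 5) -> List[List[int]]:
    """Truncate and pad a split document into a fixed max_sents x max_words grid of ids."""
    grid = []
    for sent in doc[:max_sents]:
        ids = [vocab.get(w, UNK_ID) for w in sent[:max_words]]
        grid.append(ids + [PAD_ID] * (max_words - len(ids)))
    while len(grid) < max_sents:
        grid.append([PAD_ID] * max_words)  # all-padding rows for missing sentences
    return grid


doc = split_document("Words form sentences. Sentences form documents!")
vocab = build_vocab([doc])
grid = encode_document(doc, vocab)  # 3 x 5: two real sentences plus one padding row
```

A batch is then a list of such grids, which can be turned into a `(batch, max_sents, max_words)` tensor with `torch.tensor(...)`.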

Hierarchical Attention Networks for Document Classification with web demo (PyTorch implementation).

We can try to learn that structure, or we can feed this hierarchical structure into the model and see whether it improves on the performance of existing models. There are 2 code implementations in PyTorch. An Attention-based Recurrent Network Approach.

Source: buomsoo-kim.github.io

[PyTorch] Hierarchical Attention Networks for Document Classification: Introduction.

Source: giters.com

The main objective of the project is to solve the hierarchical multi-label text classification (HMTC) problem. Hierarchical-Attention-Network: HAN implemented in PyTorch, along with attention visualization.

Source: giters.com

(Deprecated) Hierarchical Attention Networks for Document Classification (https://www.cs.cmu.edu/~diyiy/docs/naacl16.pdf) in PyTorch. A Keras implementation of a hierarchical attention network for document classification, with options to predict and present attention weights at both the word and sentence level. We will be implementing the Hierarchical Attention Network (HAN), one of the more interesting and interpretable text classification models.
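Being able to "present attention weights on both word and sentence level" follows from how HAN pools: each level scores its hidden states against a learned context vector, softmax-normalizes the scores into weights, and returns the weighted sum together with those weights. Below is a dependency-free sketch of that pooling step as described by Yang et al. (2016): u_t = tanh(W h_t + b), alpha_t = softmax(u_t . u_w), s = sum_t alpha_t h_t. The toy parameter values are made up for illustration; in a real model W, b and the context vector u_w are learned.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden: List[Vector], W: Matrix, b: Vector,
                   context: Vector) -> Tuple[Vector, Vector]:
    """HAN-style attention pooling over a sequence of hidden states.

    u_t = tanh(W h_t + b); alpha_t = softmax over t of (u_t . context);
    output = sum_t alpha_t * h_t. Returns (pooled vector, attention weights).
    """
    keys = [[math.tanh(v + bi) for v, bi in zip(matvec(W, h), b)] for h in hidden]
    weights = softmax([sum(u * c for u, c in zip(key, context)) for key in keys])
    dim = len(hidden[0])
    pooled = [sum(a * h[d] for a, h in zip(weights, hidden)) for d in range(dim)]
    return pooled, weights


# Toy example: three word states of size 2 (all parameters invented for illustration).
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]
u_w = [1.0, -1.0]
sentence_vec, word_weights = attention_pool(words, W, b, u_w)
```

The same function, with its own W, b and context vector, is applied a second time over the resulting sentence vectors to obtain the document vector and the sentence-level weights.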

BiDAF with Self-Attention. GitHub: cedias/HAN-pytorch.

Source: humboldt-wi.github.io

Hierarchical Attention Network (HAN): HAN was proposed by Yang et al. (2016).

Source: giters.com

Hierarchical Attention Networks for Document Classification.

Source: reposhub.com

Instantiating `HTMAttention` from the `htm_pytorch` package:

```python
import torch
from htm_pytorch import HTMAttention

attn = HTMAttention(
    dim=512,
    heads=8,            # number of heads for within-memory attention
    dim_head=64,        # dimension per head for within-memory attention
    topk_mems=8,        # how many memory chunks to select for
    mem_chunk_size=32,  # number of tokens in each memory chunk
    add_pos_enc=True,
)
```

Key features that differentiate HAN from existing approaches to document classification are that (1) it exploits the hierarchical nature of text data and (2) it adapts the attention mechanism for document classification.
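Putting the two key features together, the model stacks the same encoder-plus-attention pattern twice: a bidirectional GRU and attention over words produce one vector per sentence, then a second bidirectional GRU and attention over those sentence vectors produce the document vector that feeds a linear classifier. The PyTorch sketch below follows that layout under stated simplifications: layer sizes are arbitrary, padding id 0 is assumed, padded positions are masked in the attention softmax but are still run through the GRUs (no `pack_padded_sequence`), and the class names `AttentionPool` and `HAN` are hypothetical rather than taken from any repository listed on this page.

```python
import torch
import torch.nn as nn


class AttentionPool(nn.Module):
    """u = tanh(W h + b); alpha = softmax(u . context) over the sequence; out = sum alpha * h."""

    def __init__(self, dim: int):
        super().__init__()
        self.proj = nn.Linear(dim, dim)
        self.context = nn.Parameter(torch.randn(dim))  # learned context vector

    def forward(self, h, mask):
        # h: (batch, seq, dim); mask: (batch, seq) bool, True for real positions
        scores = torch.tanh(self.proj(h)) @ self.context  # (batch, seq)
        scores = scores.masked_fill(~mask, float("-inf"))
        alpha = torch.softmax(scores, dim=-1)
        return (alpha.unsqueeze(-1) * h).sum(dim=1), alpha


class HAN(nn.Module):
    def __init__(self, vocab_size, num_classes, emb_dim=50, hidden=32, pad_id=0):
        super().__init__()
        self.pad_id = pad_id
        self.embed = nn.Embedding(vocab_size, emb_dim, padding_idx=pad_id)
        self.word_gru = nn.GRU(emb_dim, hidden, batch_first=True, bidirectional=True)
        self.word_attn = AttentionPool(2 * hidden)
        self.sent_gru = nn.GRU(2 * hidden, hidden, batch_first=True, bidirectional=True)
        self.sent_attn = AttentionPool(2 * hidden)
        self.classifier = nn.Linear(2 * hidden, num_classes)

    def forward(self, docs):
        # docs: (batch, max_sents, max_words) tensor of token ids
        b, s, w = docs.shape
        words = docs.reshape(b * s, w)
        word_mask = words != self.pad_id
        # An all-padding sentence would make its softmax row all -inf (NaN), so let it
        # attend to position 0; such sentences are masked out at the sentence level below.
        word_mask[:, 0] = True
        h_words, _ = self.word_gru(self.embed(words))
        sent_vecs, word_alpha = self.word_attn(h_words, word_mask)
        sent_mask = (docs != self.pad_id).any(dim=-1)
        sent_mask[:, 0] = True
        h_sents, _ = self.sent_gru(sent_vecs.reshape(b, s, -1))
        doc_vec, sent_alpha = self.sent_attn(h_sents, sent_mask)
        return self.classifier(doc_vec), word_alpha.reshape(b, s, w), sent_alpha


torch.manual_seed(0)
model = HAN(vocab_size=10, num_classes=3)
docs = torch.tensor([[[2, 3, 4, 0, 0], [4, 3, 5, 0, 0], [0, 0, 0, 0, 0]]])
logits, word_alpha, sent_alpha = model(docs)
```

Returning `word_alpha` and `sent_alpha` next to the logits is what makes the attention-visualization step possible: they are the per-word and per-sentence weights that can be rendered as a heatmap over the input text.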

Source: giters.com

This model not only classifies a document but also picks out the specific parts of the text (sentences and individual words) that it considers most important.
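Because the attention weights come out alongside the prediction, picking out the most important parts can be as simple as ranking them. This hypothetical helper takes per-sentence and per-word weights (for example, those returned by a HAN forward pass) and returns the top sentences with their top words; the function names and the toy weights are invented for illustration.

```python
from typing import List, Tuple


def top_k(weights: List[float], k: int) -> List[int]:
    """Indices of the k largest weights, highest first (ties keep original order)."""
    return sorted(range(len(weights)), key=lambda i: -weights[i])[:k]


def explain(sentences: List[List[str]], sent_weights: List[float],
            word_weights: List[List[float]], k_sents: int = 1,
            k_words: int = 2) -> List[Tuple[str, List[str]]]:
    """Return the k_sents most-attended sentences, each with its k_words most-attended words."""
    result = []
    for i in top_k(sent_weights, k_sents):
        words = sentences[i]
        # Rank only real words; any padded positions beyond len(words) are ignored.
        best = top_k(word_weights[i][:len(words)], k_words)
        result.append((" ".join(words), [words[j] for j in best]))
    return result


sentences = [["the", "plot", "was", "dull"], ["but", "the", "acting", "was", "superb"]]
sent_weights = [0.3, 0.7]  # made-up weights for illustration
word_weights = [[0.1, 0.4, 0.1, 0.4], [0.1, 0.05, 0.3, 0.05, 0.5]]
summary = explain(sentences, sent_weights, word_weights)
```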

Source: buomsoo-kim.github.io

Hierarchical Multi-label Text Classification. This paper exploits that structure to build a…

Hierarchical-Attention-Network: an implementation of Hierarchical Attention Networks in PyTorch.

Source: kaggle.com

Source: giters.com

Source: giters.com

Import torch from htm_pytorch import HTMAttention attn HTMAttention dim 512 heads 8 number of heads for within-memory attention dim_head 64 dimension per head for within-memory attention topk_mems 8 how many memory chunks to select for mem_chunk_size 32 number of tokens in each memory chunk add_pos_enc True. Artificial Intelligence 72. Code Quality 28. An Attention-based Recurrent Network Approach. Keras implementation of hierarchical attention network for document classification with options to predict and present attention weights on both word and sentence level.

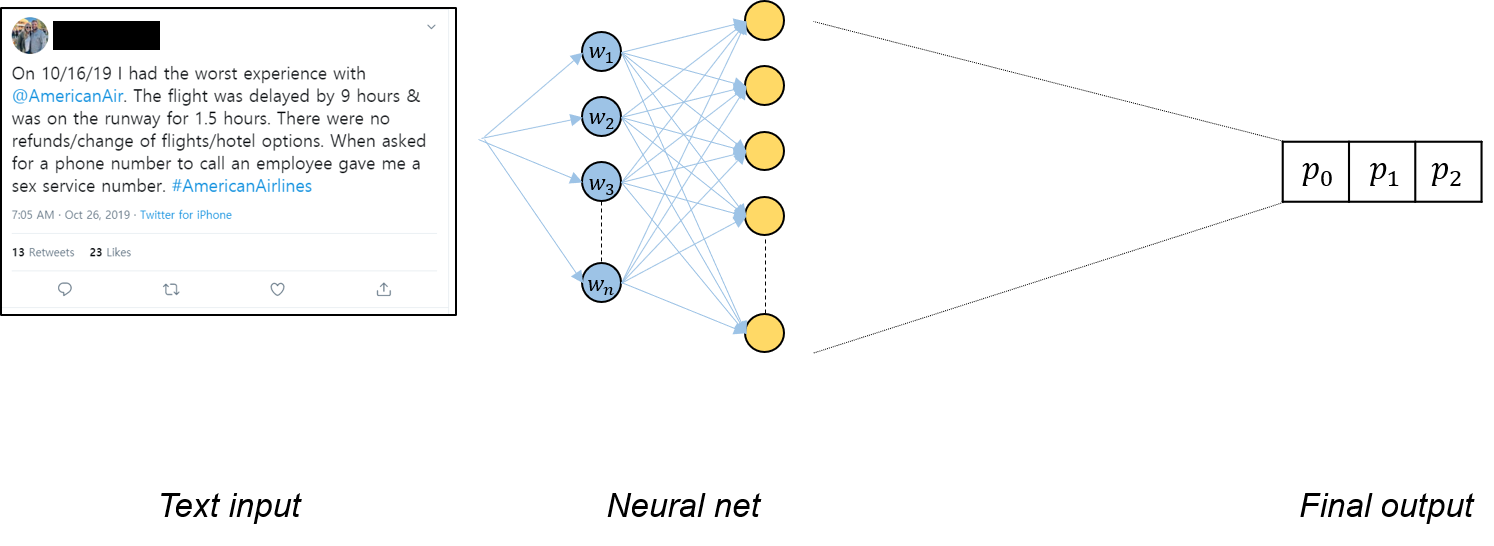

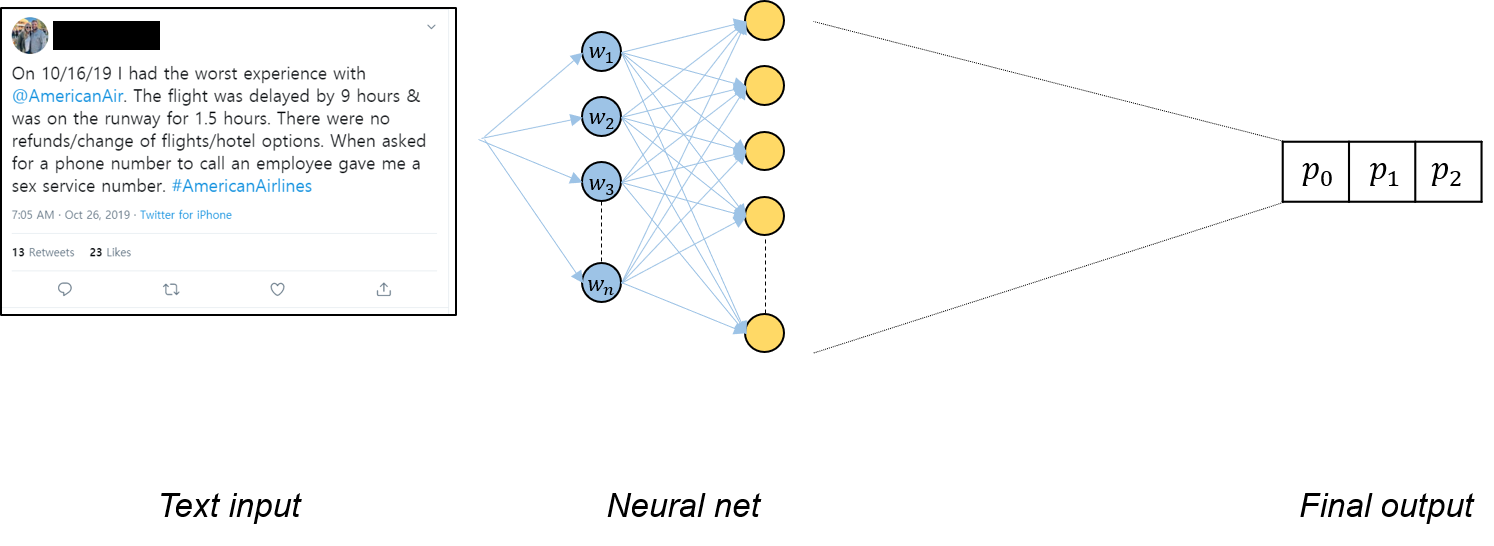

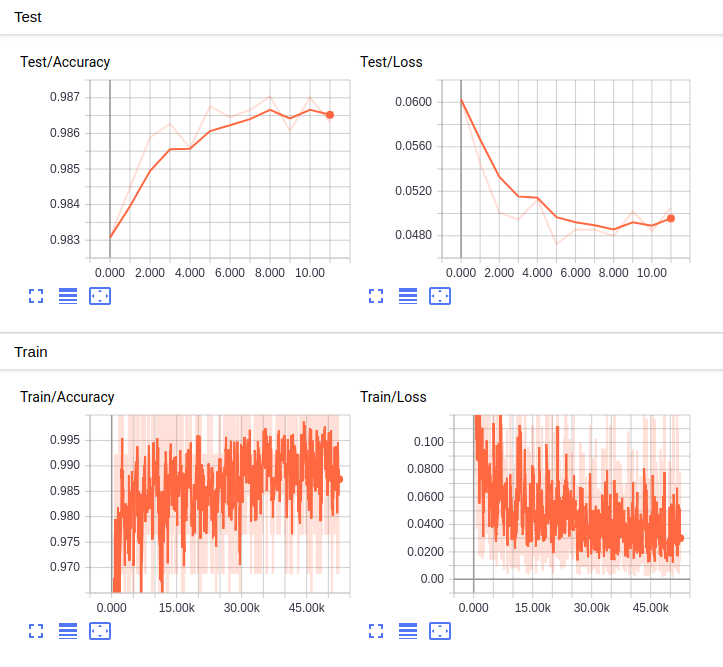

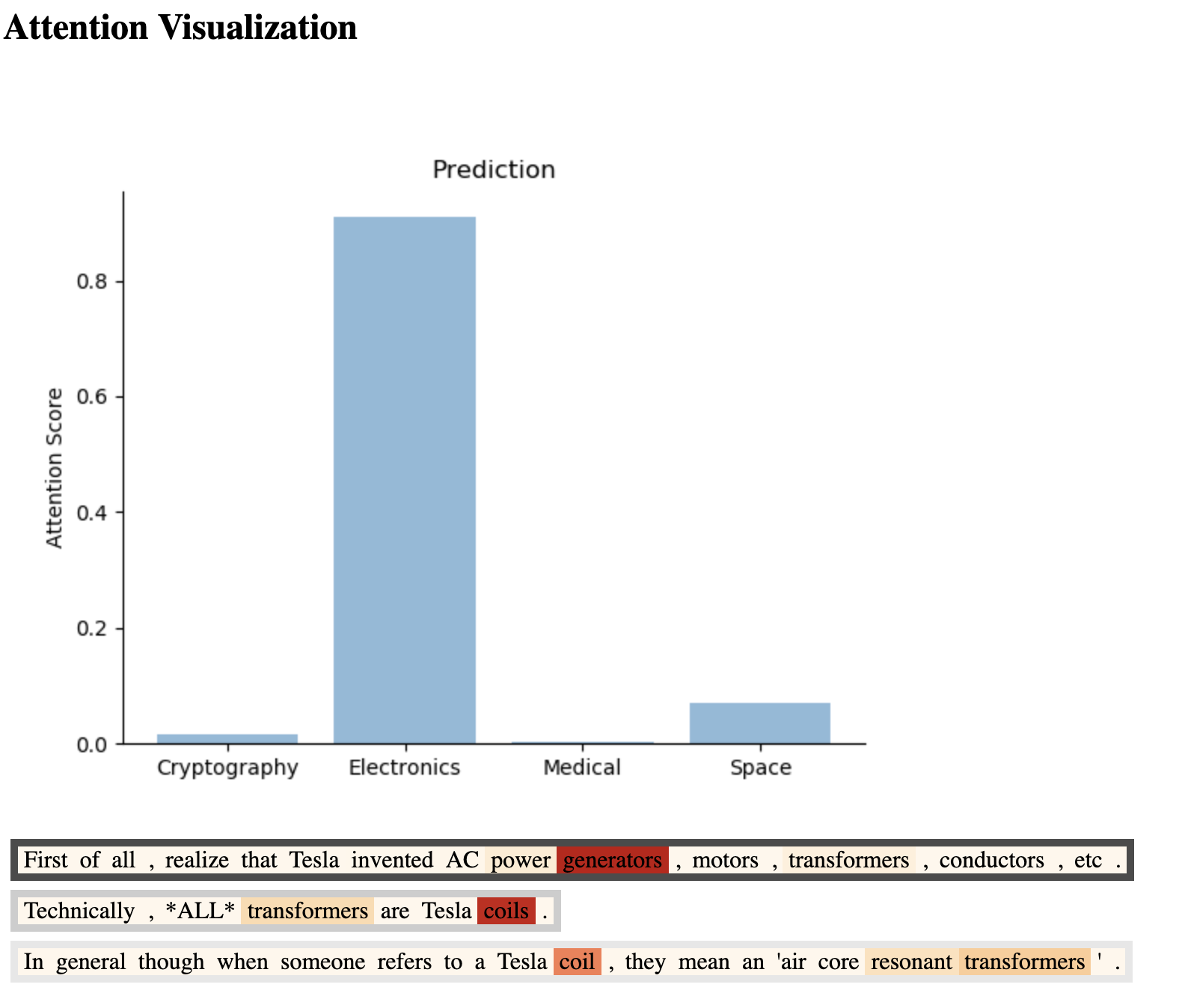

An example of the app demo for my model's output on the DBpedia dataset.

Source: github.com

Source: pythonawesome.com

Source: pythonawesome.com

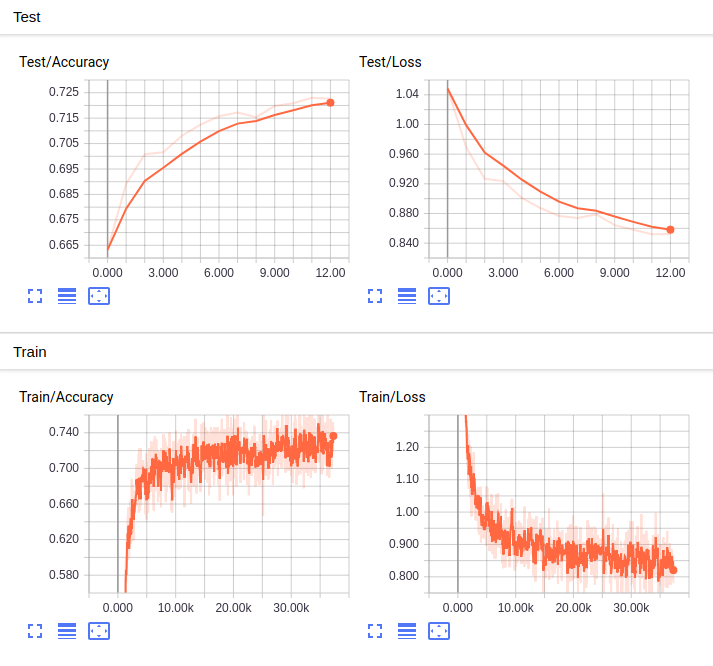

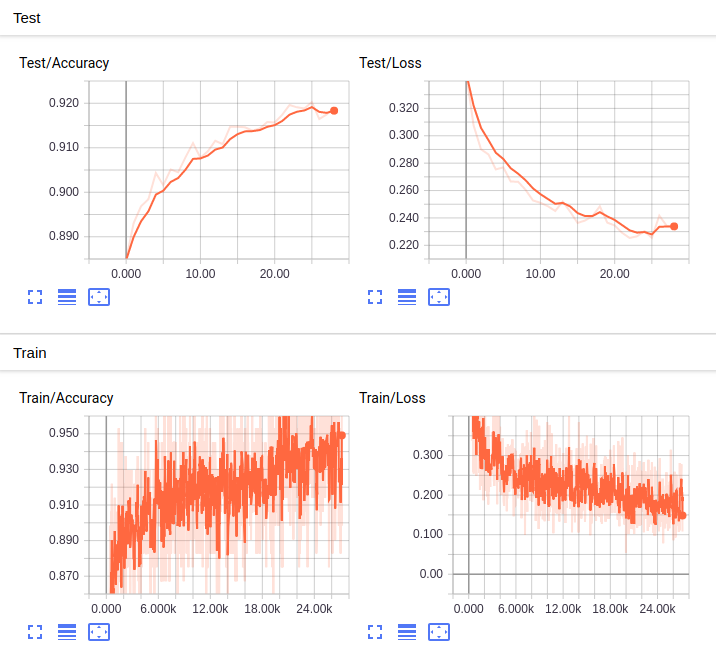

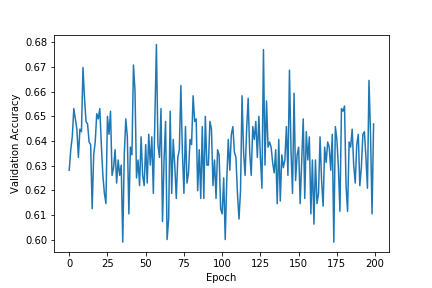

An example of my model's performance on the DBpedia dataset.
